Time-Contrastive Networks: Self-Supervised Learning from Video

Pierre Sermanet1*, Corey Lynch1*†, Yevgen Chebotar2*, Jasmine Hsu1, Eric Jang1, Stefan Schaal2, Sergey Levine1

1 Google Brain, 2 University of Southern California

(* equal contribution, † Google Brain Residency program g.co/brainresidency)

This project is part of the larger Self-Supervised Imitation Learning project. It extends the TCN project with Reinforcement Learning and more real robots.

[ Paper ] [ BibTex ] [ Video ] [ Dataset ] [ Code ] [ Slides ]

Abstract

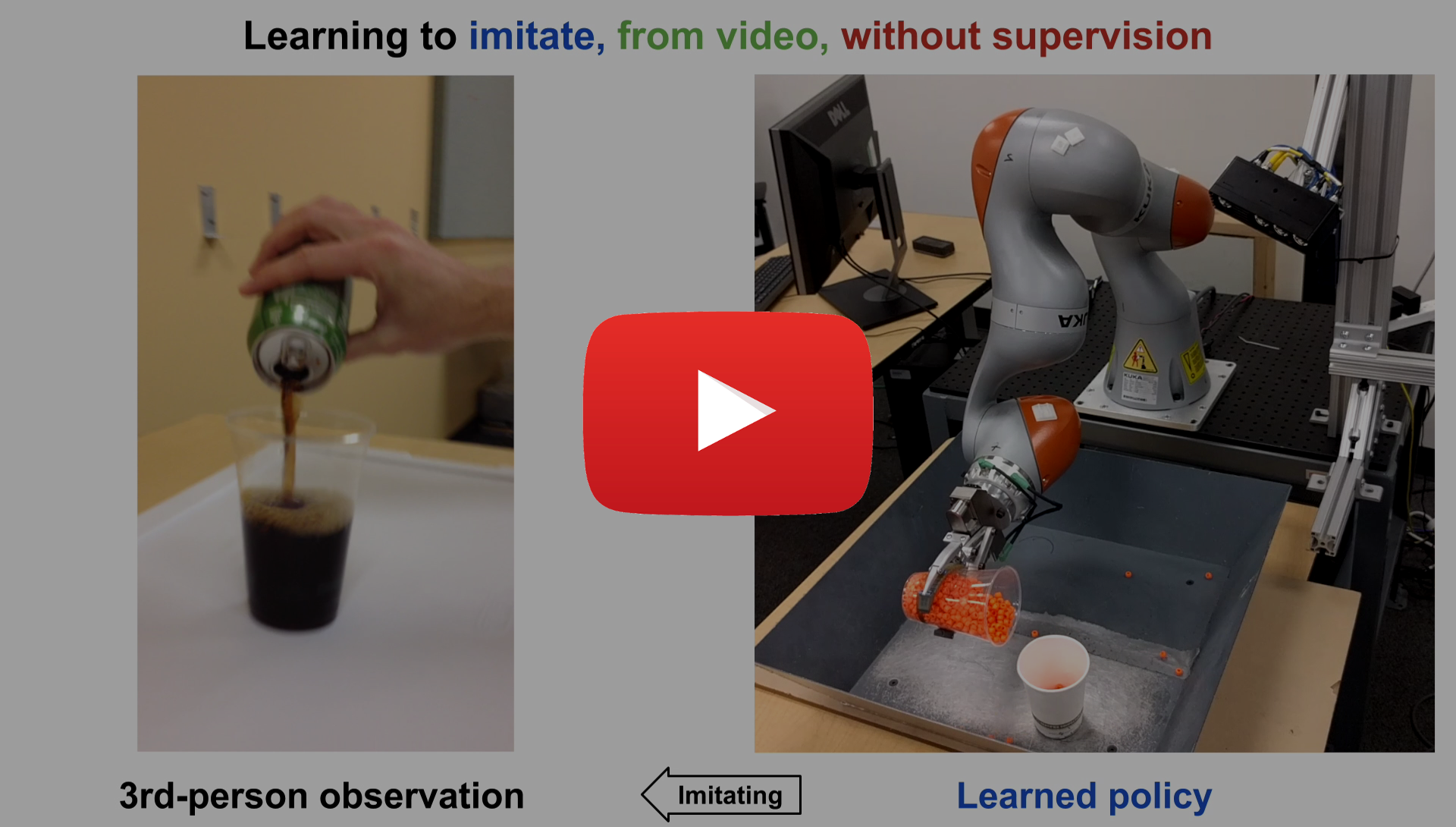

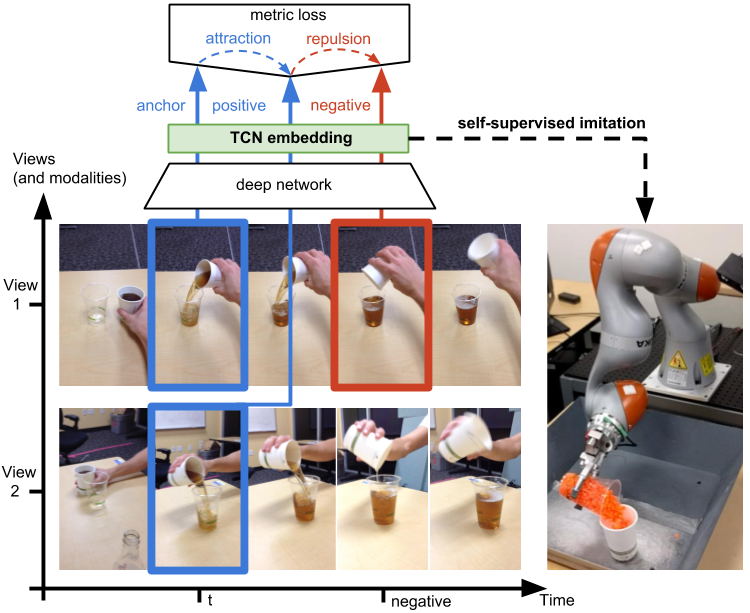

We propose a self-supervised approach for learning representations and robotic behaviors entirely from unlabeled videos recorded from multiple viewpoints, and study how this representation can be used in two robotic imitation settings: imitating object interactions from videos of humans, and imitating human poses. Imitation of human behavior requires a viewpoint-invariant representation that captures the relationships between end-effectors (hands or robot grippers) and the environment, object attributes, and body pose. We train our representations using a metric learning loss, where multiple simultaneous viewpoints of the same observation are attracted in the embedding space, while being repelled from temporal neighbors which are often visually similar but functionally different. In other words, the model simultaneously learns to recognize what is common between different-looking images, and what is different between similar-looking images. This signal causes our model to discover attributes that do not change across viewpoint, but do change across time, while ignoring nuisance variables such as occlusions, motion blur, lighting and background. We demonstrate that this representation can be used by a robot to directly mimic human poses without an explicit correspondence, and that it can be used as a reward function within a reinforcement learning algorithm. While representations are learned from an unlabeled collection of task-related videos, robot behaviors such as pouring are learned by watching a single 3rd-person demonstration by a human. Reward functions obtained by following the human demonstrations under the learned representation enable efficient reinforcement learning that is practical for real-world robotic systems.

Self-supervised imitation

Approach

Citation

@article{TCN2017,

title={Time-Contrastive Networks: Self-Supervised Learning from Video},

author={Sermanet, Pierre and Lynch, Corey and Chebotar, Yevgen and Hsu, Jasmine and Jang, Eric and Schaal, Stefan and Levine, Sergey},

journal={arXiv preprint arXiv:1704.06888},

year={2017}

}

Acknowledgments

We thank Mohi Khansari, Yunfei Bai and Erwin Coumans for help with VR simulations, Jonathan Tompson, James Davidson and Vincent Vanhoucke for helpful discussions and feedback. We thank everyone who provided imitations for this project: Phing Lee, Alexander Toshev, Anna Goldie, Deanna Chen, Deirdre Quillen, Dieterich Lawson, Eric Langlois, Ethan Holly, Irwan Bello, Jasmine Collins, Jeff Dean, Julian Ibarz, Ken Oslund, Laura Downs, Leslie Phillips, Luke Metz, Mike Schuster, Ryan Dahl, Sam Schoenholz and Yifei Feng.